

Layout Detection Module Tutorial

I. Overview

The core task of structure analysis is to parse and segment the content of input document images. It identifies the different elements in an image (such as text, charts, and images), classifies them into predefined categories (e.g., plain text areas, title areas, table areas, image areas, and list areas), and determines the position and size of each region in the document.

II. Supported Model List

The inference time only includes the model inference time and does not include the time for pre- or post-processing.

The layout detection model includes 20 common categories: document title, paragraph title, text, page number, abstract, table of contents, references, footnotes, header, footer, algorithm, formula, formula number, image, table, seal, figure_table title, chart, sidebar text, and lists of references.

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PP-DocLayout_plus-L | Inference Model/Training Model | 83.2 | 53.03 / 17.23 | 634.62 / 378.32 | 126.01 | A higher-precision layout area localization model trained on a self-built dataset containing Chinese and English papers, PPT, multi-layout magazines, contracts, books, exams, ancient books and research reports using RT-DETR-L. |

The layout detection model includes 1 category: Block.

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PP-DocBlockLayout | Inference Model/Training Model | 95.9 | 34.60 / 28.54 | 506.43 / 256.83 | 123.92 | A layout block localization model trained on a self-built dataset containing Chinese and English papers, PPT, multi-layout magazines, contracts, books, exams, ancient books and research reports using RT-DETR-L. |

The layout detection model includes 23 common categories: document title, paragraph title, text, page number, abstract, table of contents, references, footnotes, header, footer, algorithm, formula, formula number, image, figure title, table, table title, seal, chart title, chart, header image, footer image, and sidebar text.

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PP-DocLayout-L | Inference Model/Training Model | 90.4 | 33.59 / 33.59 | 503.01 / 251.08 | 123.76 | A high-precision layout area localization model trained on a self-built dataset containing Chinese and English papers, magazines, contracts, books, exams, and research reports using RT-DETR-L. |
| PP-DocLayout-M | Inference Model/Training Model | 75.2 | 13.03 / 4.72 | 43.39 / 24.44 | 22.578 | A layout area localization model with balanced precision and efficiency, trained on a self-built dataset containing Chinese and English papers, magazines, contracts, books, exams, and research reports using PicoDet-L. |
| PP-DocLayout-S | Inference Model/Training Model | 70.9 | 11.54 / 3.86 | 18.53 / 6.29 | 4.834 | A high-efficiency layout area localization model trained on a self-built dataset containing Chinese and English papers, magazines, contracts, books, exams, and research reports using PicoDet-S. |

- Performance Test Environment
    - Test Dataset:
        - Layout Detection Model: A self-built layout area detection dataset by PaddleOCR, containing 500 common document type images such as Chinese and English papers, magazines, contracts, books, exam papers, and research reports.
        - Table Layout Detection Model: A self-built table area detection dataset by PaddleOCR, including 7,835 Chinese and English paper document type images with tables.
        - 3-Class Layout Detection Model: A self-built layout area detection dataset by PaddleOCR, comprising 1,154 common document type images such as Chinese and English papers, magazines, and research reports.
        - 5-Class English Document Area Detection Model: The evaluation dataset of PubLayNet, containing 11,245 images of English documents.
        - 17-Class Area Detection Model: A self-built layout area detection dataset by PaddleOCR, including 892 common document type images such as Chinese and English papers, magazines, and research reports.
    - Hardware Configuration:
        - GPU: NVIDIA Tesla T4
        - CPU: Intel Xeon Gold 6271C @ 2.60GHz
    - Software Environment:
        - Ubuntu 20.04 / CUDA 11.8 / cuDNN 8.9 / TensorRT 8.6.1.6
        - paddlepaddle 3.0.0 / paddlex 3.0.3
- Inference Mode Description: see the Normal Mode / High-Performance Mode table that follows the complete model list below.

❗ The list above shows the core models that the layout detection module primarily supports. The module also supports additional predefined models with different category sets. The complete model list is as follows:


* Table Layout Detection Model

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PicoDet_layout_1x_table | Inference Model/Training Model | 97.5 | 9.57 / 6.63 | 27.66 / 16.75 | 7.4 | A high-efficiency layout area localization model trained on a self-built dataset using PicoDet-1x, capable of detecting table regions. |

* 3-Class Layout Detection Model

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PicoDet-S_layout_3cls | Inference Model/Training Model | 88.2 | 8.43 / 3.44 | 17.60 / 6.51 | 4.8 | A high-efficiency layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using PicoDet-S. |
| PicoDet-L_layout_3cls | Inference Model/Training Model | 89.0 | 12.80 / 9.57 | 45.04 / 23.86 | 22.6 | A balanced efficiency and precision layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using PicoDet-L. |
| RT-DETR-H_layout_3cls | Inference Model/Training Model | 95.8 | 114.80 / 25.65 | 924.38 / 924.38 | 470.1 | A high-precision layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using RT-DETR-H. |

* 5-Class English Document Area Detection Model

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PicoDet_layout_1x | Inference Model/Training Model | 97.8 | 9.62 / 6.75 | 26.96 / 12.77 | 7.4 | A high-efficiency English document layout area localization model trained on the PubLayNet dataset using PicoDet-1x. |

* 17-Class Area Detection Model

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PicoDet-S_layout_17cls | Inference Model/Training Model | 87.4 | 8.80 / 3.62 | 17.51 / 6.35 | 4.8 | A high-efficiency layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using PicoDet-S. |
| PicoDet-L_layout_17cls | Inference Model/Training Model | 89.0 | 12.60 / 10.27 | 43.70 / 24.42 | 22.6 | A balanced efficiency and precision layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using PicoDet-L. |
| RT-DETR-H_layout_17cls | Inference Model/Training Model | 98.3 | 115.29 / 101.18 | 964.75 / 964.75 | 470.2 | A high-precision layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using RT-DETR-H. |

Inference Mode Description:

| Mode | GPU Configuration | CPU Configuration | Acceleration Technology Combination |
|---|---|---|---|
| Normal Mode | FP32 Precision / No TRT Acceleration | FP32 Precision / 8 Threads | PaddleInference |
| High-Performance Mode | Optimal combination of pre-selected precision types and acceleration strategies | FP32 Precision / 8 Threads | Pre-selected optimal backend (Paddle/OpenVINO/TRT, etc.) |

III. Quick Integration

❗ Before quick integration, please install the PaddleX wheel package. For detailed instructions, refer to the PaddleX Local Installation Tutorial.

After installing the wheel package, a few lines of code are enough to run inference with the structure analysis module. You can switch freely between the models in this module, and you can also integrate the module's model inference into your own project. Before running the following code, please download the demo image (`layout.jpg`) to your local machine.

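```python
from paddlex import create_model

model_name = "PP-DocLayout_plus-L"
# Create the layout detection model; official weights are downloaded on first use
model = create_model(model_name=model_name)
# Run inference on the demo image; layout_nms=True filters overlapping boxes with NMS
output = model.predict("layout.jpg", batch_size=1, layout_nms=True)
for res in output:
    res.print()  # print the detection results
    res.save_to_img(save_path="./output/")  # save the visualized image
    res.save_to_json(save_path="./output/res.json")  # save the results as JSON
```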

Note: By default, official models are downloaded from HuggingFace first. PaddleX also supports specifying the preferred source through the environment variable `PADDLE_PDX_MODEL_SOURCE`. The supported values are `huggingface`, `aistudio`, `bos`, and `modelscope`. For example, to prioritize `bos`, set `PADDLE_PDX_MODEL_SOURCE="bos"`.

After running, the result is:

```python
{'res': {'input_path': 'layout.jpg', 'page_index': None, 'boxes': [
    {'cls_id': 2, 'label': 'text', 'score': 0.9870226979255676, 'coordinate': [34.101906, 349.85275, 358.59213, 611.0772]},
    {'cls_id': 2, 'label': 'text', 'score': 0.9866003394126892, 'coordinate': [34.500324, 647.1585, 358.29367, 848.66797]},
    {'cls_id': 2, 'label': 'text', 'score': 0.9846674203872681, 'coordinate': [385.71445, 497.40973, 711.2261, 697.84265]},
    {'cls_id': 8, 'label': 'table', 'score': 0.984126091003418, 'coordinate': [73.76879, 105.94899, 321.95303, 298.84888]},
    {'cls_id': 8, 'label': 'table', 'score': 0.9834211468696594, 'coordinate': [436.95642, 105.81531, 662.7168, 313.48462]},
    {'cls_id': 2, 'label': 'text', 'score': 0.9832247495651245, 'coordinate': [385.62787, 346.2288, 710.10095, 458.77127]},
    {'cls_id': 2, 'label': 'text', 'score': 0.9816061854362488, 'coordinate': [385.7802, 735.1931, 710.56134, 849.9764]},
    {'cls_id': 6, 'label': 'figure_title', 'score': 0.9577341079711914, 'coordinate': [34.421448, 20.055151, 358.71283, 76.53663]},
    {'cls_id': 6, 'label': 'figure_title', 'score': 0.9505634307861328, 'coordinate': [385.72278, 20.053688, 711.29333, 74.92744]},
    {'cls_id': 0, 'label': 'paragraph_title', 'score': 0.9001723527908325, 'coordinate': [386.46344, 477.03488, 699.4023, 490.07474]},
    {'cls_id': 0, 'label': 'paragraph_title', 'score': 0.8845751285552979, 'coordinate': [35.413048, 627.73596, 185.58383, 640.52264]},
    {'cls_id': 0, 'label': 'paragraph_title', 'score': 0.8837394118309021, 'coordinate': [387.17603, 716.3423, 524.7841, 729.258]},
    {'cls_id': 0, 'label': 'paragraph_title', 'score': 0.8508939743041992, 'coordinate': [35.50064, 331.18445, 141.6444, 344.81097]}
]}}
```

The meanings of the parameters are as follows:

- `input_path`: The path to the input image for prediction.
- `page_index`: If the input is a PDF file, the page of the PDF that the result belongs to; otherwise, `None`.
- `boxes`: Information about the predicted bounding boxes, a list of dictionaries. Each dictionary represents a detected object and contains the following information:
    - `cls_id`: Class ID, an integer.
    - `label`: Class label, a string.
    - `score`: Confidence score of the bounding box, a float.
    - `coordinate`: Coordinates of the bounding box, a list of floats in the format `[xmin, ymin, xmax, ymax]`.
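Downstream code often needs the boxes grouped by category rather than as one flat list. The sketch below does that for the structure shown above. The function name `boxes_by_label` is our own, and we assume that the JSON written by `save_to_json` has the same shape as the printed result, so the helper accepts either the outer `{'res': ...}` wrapper or the inner dictionary. It uses only the standard library.

```python
from collections import defaultdict


def boxes_by_label(result, min_score=0.0):
    """Group detected regions by label, each group ordered top to bottom.

    `result` may be the printed structure ({'res': {...}}) or its inner dict.
    Each entry in a group is a (score, [xmin, ymin, xmax, ymax]) tuple.
    Boxes scoring below `min_score` are skipped.
    """
    res = result.get("res", result)
    groups = defaultdict(list)
    for box in res["boxes"]:
        if box["score"] >= min_score:
            groups[box["label"]].append((box["score"], box["coordinate"]))
    for entries in groups.values():
        # coordinate[1] is ymin, so smaller values are higher on the page.
        entries.sort(key=lambda entry: entry[1][1])
    return dict(groups)


# Usage with the file written by res.save_to_json(save_path="./output/res.json"):
# import json
# with open("./output/res.json", encoding="utf-8") as f:
#     groups = boxes_by_label(json.load(f))
# print({label: len(entries) for label, entries in groups.items()})
```

Sorting by `ymin` gives a simple top-to-bottom order within each label; it is not a reading order for multi-column pages.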

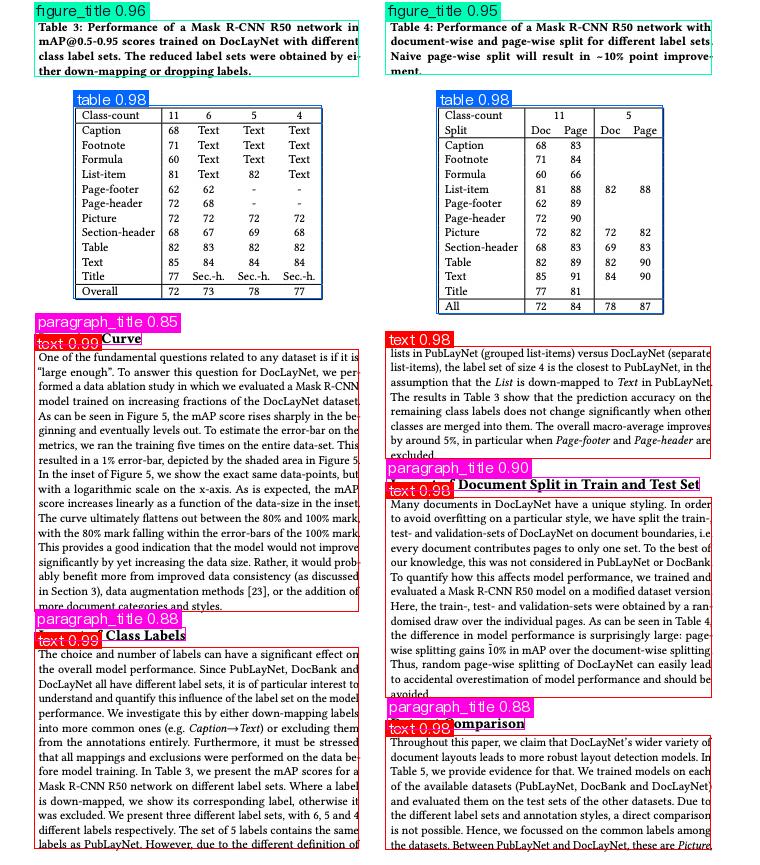

The visualized image is as follows:

Relevant methods, parameters, and explanations are as follows:

`create_model` instantiates a target detection model (here, `PP-DocLayout_plus-L` is used as an example). Its parameters are explained as follows:

- `model_name`: Name of the model. Type: `str`. Default: `None`.
- `model_dir`: Path where the model is stored. Type: `str`. Default: `None`.
- `device`: The device used for model inference. Type: `str`. You can specify a particular GPU card, such as `"gpu:0"`, a card of other hardware, such as `"npu:0"`, or the CPU, `"cpu"`. Default: `gpu:0`.
- `img_size`: Size of the input image; if not specified, the default PaddleX official model configuration is used. Type: `int`/`list`/`None`. Default: `None`.
    - `int`, e.g., `640`: resize the input image to 640x640.
    - `list`, e.g., `[640, 512]`: resize the input image to a width of 640 and a height of 512.
    - `None`: not specified; the default PaddleX official model configuration is used.
- `threshold`: Threshold for filtering low-confidence prediction results; if not specified, the default PaddleX official model configuration is used. Type: `float`/`dict`/`None`. Default: `None`.
    - `float`, e.g., `0.2`: filter out all bounding boxes with a confidence score below 0.2.
    - `dict`, with `int` keys representing `cls_id` and `float` threshold values. For example, `{0: 0.45, 2: 0.48, 7: 0.4}` applies a threshold of 0.45 to cls_id 0, 0.48 to cls_id 2, and 0.4 to cls_id 7.
    - `None`: not specified; the default PaddleX official model configuration is used.
- `layout_nms`: Whether to use NMS post-processing to filter overlapping boxes; if not specified, the default PaddleX official model configuration is used. Type: `bool`/`None`. Default: `None`.
    - `bool`, `True`/`False`: whether to apply NMS post-processing to filter overlapping boxes.
    - `None`: not specified; the default PaddleX official model configuration is used.
- `layout_unclip_ratio`: Scaling factor for the side lengths of the detection boxes; if not specified, the default PaddleX official model configuration is used. Type: `float`/`list`/`dict`/`None`. Default: `None`.
    - `float`, a positive number, e.g., `1.1`: expand the width and height of each detection box by 1.1 times while keeping its center unchanged.
    - `list`, e.g., `[1.2, 1.5]`: expand the width by 1.2 times and the height by 1.5 times while keeping the center unchanged.
    - `dict`, with `int` keys representing `cls_id` and scaling-factor values, e.g., `{0: (1.1, 2.0)}`: for cls_id 0, expand the width by 1.1 times and the height by 2.0 times while keeping the center unchanged.
    - `None`: not specified; the default PaddleX official model configuration is used.
- `layout_merge_bboxes_mode`: Merging mode for the detection boxes output by the model; if not specified, the default PaddleX official model configuration is used. Type: `str`/`dict`/`None`. Default: `None`.
    - `large`: for overlapping detection boxes, keep only the largest outer box and delete the inner overlapping boxes.
    - `small`: for overlapping detection boxes, keep only the smallest inner box and delete the outer overlapping boxes.
    - `union`: no filtering is performed; both inner and outer boxes are kept.
    - `dict`, with `int` keys representing `cls_id` and merging-mode values, e.g., `{0: "large", 2: "small"}`.
    - `None`: not specified; the default PaddleX official model configuration is used.
- `use_hpip`: Whether to enable the high-performance inference plugin. Type: `bool`. Default: `False`.
- `hpi_config`: High-performance inference configuration. Type: `dict`/`None`. Default: `None`.

Note that `model_name` must be specified. Once `model_name` is specified, the default PaddleX built-in model parameters are used; if `model_dir` is also specified, the user-defined model is used instead.

The `predict()` method of the target detection model is called for inference. Its parameters are `input`, `batch_size`, `threshold`, `layout_nms`, `layout_unclip_ratio`, and `layout_merge_bboxes_mode`, explained as follows:

- `input`: Data for prediction; multiple input types are supported. Type: Python Var/`str`/`list`. Default: `None`.
    - Python variable, such as image data represented by `numpy.ndarray`.
    - File path, such as the local path of an image file: `/root/data/img.jpg`.
    - URL, such as the network URL of an image file (example).
    - Local directory containing the data files to be predicted, such as `/root/data/`.
    - List whose elements are of the above types, such as `[numpy.ndarray, numpy.ndarray]`, `["/root/data/img1.jpg", "/root/data/img2.jpg"]`, or `["/root/data1", "/root/data2"]`.
- `batch_size`: Batch size. Type: `int`. Any integer greater than 0. Default: `1`.
- `threshold`: Threshold for filtering low-confidence prediction results. Type: `float`/`dict`/`None`. Default: `None`.
    - `float`, e.g., `0.2`: filter out all bounding boxes with a confidence score below 0.2.
    - `dict`, with `int` keys representing `cls_id` and `float` threshold values. For example, `{0: 0.45, 2: 0.48, 7: 0.4}` applies a threshold of 0.45 to cls_id 0, 0.48 to cls_id 2, and 0.4 to cls_id 7.
    - `None`: not specified; the `threshold` set in `create_model` is used. If it was not set in `create_model` either, the default PaddleX official model configuration is used.
- `layout_nms`: Whether to use NMS post-processing to filter overlapping boxes. Type: `bool`/`None`. Default: `None`.
    - `bool`, `True`/`False`: whether to apply NMS post-processing to filter overlapping boxes.
    - `None`: not specified; the `layout_nms` set in `create_model` is used. If it was not set in `create_model` either, the default PaddleX official model configuration is used.
- `layout_unclip_ratio`: Scaling factor for the side lengths of the detection boxes. Type: `float`/`list`/`dict`/`None`. Default: `None`.
    - `float`, a positive number, e.g., `1.1`: expand the width and height of each detection box by 1.1 times while keeping its center unchanged.
    - `list`, e.g., `[1.2, 1.5]`: expand the width by 1.2 times and the height by 1.5 times while keeping the center unchanged.
    - `dict`, with `int` keys representing `cls_id` and scaling-factor values, e.g., `{0: (1.1, 2.0)}`: for cls_id 0, expand the width by 1.1 times and the height by 2.0 times while keeping the center unchanged.
    - `None`: not specified; the `layout_unclip_ratio` set in `create_model` is used. If it was not set in `create_model` either, the default PaddleX official model configuration is used.
- `layout_merge_bboxes_mode`: Merging mode for the detection boxes output by the model. Type: `str`/`dict`/`None`. Default: `None`.
    - `large`: for overlapping detection boxes, keep only the largest outer box and delete the inner overlapping boxes.
    - `small`: for overlapping detection boxes, keep only the smallest inner box and delete the outer overlapping boxes.
    - `union`: no filtering is performed; both inner and outer boxes are kept.
    - `dict`, with `int` keys representing `cls_id` and merging-mode values, e.g., `{0: "large", 2: "small"}`.
    - `None`: not specified; the `layout_merge_bboxes_mode` set in `create_model` is used. If it was not set in `create_model` either, the default PaddleX official model configuration is used.

In addition, the visualized image with results and the prediction results can be obtained through attributes:

- `json`: Get the prediction result in `json` format.
- `img`: Get the visualized image in `dict` format.

For more information on using PaddleX's single-model inference API, refer to PaddleX Single Model Python Script Usage Instructions.
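To make the `threshold`, `layout_unclip_ratio`, and `layout_nms` options more concrete, the following pure-Python sketch applies the behaviour described above to box dictionaries shaped like the printed output. It is an illustration, not PaddleX's internal post-processing. Several details are our own assumptions: the fallback threshold for class IDs missing from a dictionary (`default=0.5`), leaving classes missing from an unclip dictionary unscaled, not clipping expanded boxes to the image border, and using generic class-agnostic greedy NMS with an IoU threshold of 0.5.

```python
def filter_by_threshold(boxes, threshold, default=0.5):
    """Drop boxes whose score is below the threshold.

    threshold: a float for all classes, or a {cls_id: float} dict.
    default: hypothetical fallback for classes missing from the dict.
    """
    if isinstance(threshold, dict):
        return [b for b in boxes if b["score"] >= threshold.get(b["cls_id"], default)]
    return [b for b in boxes if b["score"] >= threshold]


def unclip_box(coordinate, ratio):
    """Scale an [xmin, ymin, xmax, ymax] box about its center.

    ratio: one factor for width and height, or a (width, height) pair.
    """
    if isinstance(ratio, (int, float)):
        w_ratio = h_ratio = float(ratio)
    else:
        w_ratio, h_ratio = ratio
    xmin, ymin, xmax, ymax = coordinate
    cx, cy = (xmin + xmax) / 2, (ymin + ymax) / 2
    half_w = (xmax - xmin) * w_ratio / 2
    half_h = (ymax - ymin) * h_ratio / 2
    return [cx - half_w, cy - half_h, cx + half_w, cy + half_h]


def apply_unclip(boxes, ratio):
    """Apply a float, a [w, h] pair, or a {cls_id: (w, h)} dict to every box."""
    scaled = []
    for b in boxes:
        # Classes missing from a dict keep their original size (factor 1.0).
        r = ratio.get(b["cls_id"], 1.0) if isinstance(ratio, dict) else ratio
        scaled.append({**b, "coordinate": unclip_box(b["coordinate"], r)})
    return scaled


def iou(a, b):
    """Intersection over union of two [xmin, ymin, xmax, ymax] boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes, iou_threshold=0.5):
    """Visit boxes by descending score; drop any that overlap an already kept box."""
    kept = []
    for b in sorted(boxes, key=lambda b: b["score"], reverse=True):
        if all(iou(b["coordinate"], k["coordinate"]) < iou_threshold for k in kept):
            kept.append(b)
    return kept
```

For example, `filter_by_threshold(boxes, {0: 0.45, 2: 0.48, 7: 0.4})` mirrors the dictionary form of `threshold`, and `apply_unclip(boxes, {0: (1.1, 2.0)})` mirrors the dictionary form of `layout_unclip_ratio`.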

IV. Custom Development

If you need higher accuracy than the existing models provide, you can use PaddleX's custom development capabilities to develop better structure analysis models. Before developing a structure analysis model with PaddleX, make sure you have installed PaddleX's model training capabilities for detection models. The installation process is described in the PaddleX Local Installation Tutorial.

4.1 Data Preparation

Before model training, you need to prepare the corresponding dataset for the task module. PaddleX provides a data validation function for each module, and only data that passes the validation can be used for model training. Additionally, PaddleX provides demo datasets for each module, which you can use to complete subsequent development based on the official demos. If you wish to use private datasets for subsequent model training, refer to the PaddleX Object Detection Task Module Data Annotation Tutorial.

4.1.1 Demo Data Download

You can use the following commands to download the demo dataset to a specified folder:

```bash
cd /path/to/paddlex
wget https://paddle-model-ecology.bj.bcebos.com/paddlex/data/det_layout_examples.tar -P ./dataset
tar -xf ./dataset/det_layout_examples.tar -C ./dataset/
```

4.1.2 Data Validation

A single command can complete data validation:

```bash
python main.py -c paddlex/configs/modules/layout_detection/PP-DocLayout-L.yaml \
    -o Global.mode=check_dataset \
    -o Global.dataset_dir=./dataset/det_layout_examples
```

After executing the above command, PaddleX validates the dataset and collects its basic information. Upon successful execution, the log prints the message `Check dataset passed !`. The validation result file is saved to `./output/check_dataset_result.json`, and related outputs are saved in the `./output/check_dataset` directory under the current directory. The output directory includes visualized example images and a histogram of the sample distribution.

The specific content of the validation result file is:

```json
{
  "done_flag": true,
  "check_pass": true,
  "attributes": {
    "num_classes": 11,
    "train_samples": 90,
    "train_sample_paths": [
      "check_dataset/demo_img/JPEGImages/train_0077.jpg",
      "check_dataset/demo_img/JPEGImages/train_0028.jpg",
      "check_dataset/demo_img/JPEGImages/train_0012.jpg"
    ],
    "val_samples": 20,
    "val_sample_paths": [
      "check_dataset/demo_img/JPEGImages/val_0007.jpg",
      "check_dataset/demo_img/JPEGImages/val_0019.jpg",
      "check_dataset/demo_img/JPEGImages/val_0010.jpg"
    ]
  },
  "analysis": {
    "histogram": "check_dataset/histogram.png"
  },
  "dataset_path": "det_layout_examples",
  "show_type": "image",
  "dataset_type": "COCODetDataset"
}
```

In the verification result above, `check_pass` being `true` means the dataset format meets the requirements. Details of the other indicators are as follows:

- `attributes.num_classes`: The number of classes in this dataset is 11;
- `attributes.train_samples`: The number of training samples in this dataset is 90;
- `attributes.val_samples`: The number of validation samples in this dataset is 20;
- `attributes.train_sample_paths`: The list of relative paths to the visualization images of training samples in this dataset;
- `attributes.val_sample_paths`: The list of relative paths to the visualization images of validation samples in this dataset.
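If you script the validation step (for example, in CI), you can gate training on the result file instead of reading the log. The following is a minimal standard-library sketch that relies only on the field names shown in the example file above; the function name `summarize_check_result` is hypothetical.

```python
import json
from pathlib import Path


def summarize_check_result(path="./output/check_dataset_result.json"):
    """Return (num_classes, train_samples, val_samples) from a check result file.

    Raises RuntimeError if PaddleX reported that the dataset failed validation.
    """
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    if not data.get("check_pass", False):
        raise RuntimeError(f"dataset validation failed; see {path}")
    attrs = data["attributes"]
    return attrs["num_classes"], attrs["train_samples"], attrs["val_samples"]


# For the example result file above, this returns (11, 90, 20).
```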

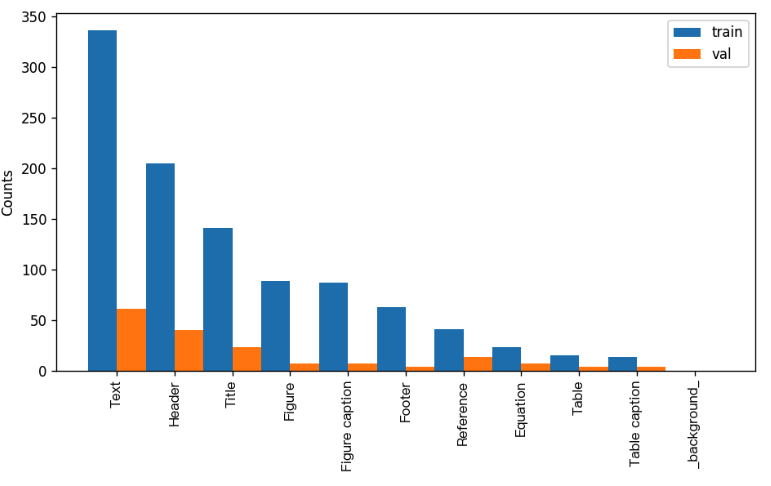

The dataset verification also analyzes the distribution of sample numbers across all classes and generates a histogram (histogram.png):

4.1.3 Dataset Format Conversion/Dataset Splitting (Optional)

After completing dataset verification, you can convert the dataset format or re-split the training/validation ratio by modifying the configuration file or appending hyperparameters.


(1) Dataset Format Conversion

Layout detection does not support data format conversion.

(2) Dataset Splitting

Parameters for dataset splitting can be set by modifying the `CheckDataset` section in the configuration file. Examples of some parameters in the configuration file are as follows:

- `CheckDataset`:
    - `split`:
        - `enable`: Whether to re-split the dataset. Set to `True` to enable dataset splitting; the default is `False`.
        - `train_percent`: If re-splitting the dataset, the percentage of the training set. Any integer between 0 and 100; its sum with `val_percent` must be 100.
        - `val_percent`: If re-splitting the dataset, the percentage of the validation set. Any integer between 0 and 100; its sum with `train_percent` must be 100.
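The effect of `train_percent` and `val_percent` can be illustrated with a random re-split of sample IDs. This is a sketch of the idea, not PaddleX's implementation: the function name `resplit`, the fixed random seed, and rounding the training count down are our own assumptions.

```python
import random


def resplit(sample_ids, train_percent, val_percent, seed=0):
    """Shuffle `sample_ids` and divide them into train/val lists by percentage."""
    if not (0 <= train_percent <= 100 and 0 <= val_percent <= 100):
        raise ValueError("percentages must be integers between 0 and 100")
    if train_percent + val_percent != 100:
        raise ValueError("train_percent + val_percent must equal 100")
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)  # fixed seed keeps the split reproducible
    n_train = len(ids) * train_percent // 100  # round the training count down
    return ids[:n_train], ids[n_train:]


# The demo dataset has 90 + 20 = 110 images; a 90/10 split gives 99 / 11.
train_ids, val_ids = resplit(range(110), train_percent=90, val_percent=10)
print(len(train_ids), len(val_ids))  # 99 11
```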

For example, if you want to re-split the dataset with a 90% training set and a 10% validation set, modify the configuration file as follows:

```yaml
......
CheckDataset:
  ......
  split:
    enable: True
    train_percent: 90
    val_percent: 10
  ......
```

Then execute the command:

```bash
python main.py -c paddlex/configs/modules/layout_detection/PP-DocLayout-L.yaml \
    -o Global.mode=check_dataset \
    -o Global.dataset_dir=./dataset/det_layout_examples
```

After dataset splitting, the original annotation files are renamed to `xxx.bak` in their original path.

The above parameters can also be set by appending command-line arguments:

```bash
python main.py -c paddlex/configs/modules/layout_detection/PP-DocLayout-L.yaml \
    -o Global.mode=check_dataset \
    -o Global.dataset_dir=./dataset/det_layout_examples \
    -o CheckDataset.split.enable=True \
    -o CheckDataset.split.train_percent=90 \
    -o CheckDataset.split.val_percent=10
```

4.2 Model Training

A single command is sufficient to complete model training, taking the training of PP-DocLayout-L as an example:

```bash
python main.py -c paddlex/configs/modules/layout_detection/PP-DocLayout-L.yaml \
    -o Global.mode=train \
    -o Global.dataset_dir=./dataset/det_layout_examples
```

The steps required are:

- Specify the path to the model's `.yaml` configuration file (here it is `PP-DocLayout-L.yaml`). When training other models, specify the corresponding configuration file; the mapping between models and configuration files can be found in the PaddleX Model List (CPU/GPU).
- Specify the mode as model training: `-o Global.mode=train`.
- Specify the path to the training dataset: `-o Global.dataset_dir`.
- Other related parameters can be set by modifying the `Global` and `Train` fields in the `.yaml` configuration file, or adjusted by appending parameters on the command line. For example, to train on the first two GPUs: `-o Global.device=gpu:0,1`; to set the number of training epochs to 10: `-o Train.epochs_iters=10`. For more modifiable parameters and their detailed explanations, refer to the PaddleX Common Configuration Parameters for Model Tasks.
- New feature: Paddle 3.0 supports CINN (Compiler Infrastructure for Neural Networks) to accelerate training on GPU devices. Specify `-o Train.dy2st=True` to enable it.
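If you launch training from a Python script (for example, to try several settings in a row), a small helper can assemble the same command line. `build_paddlex_command` is a hypothetical convenience function, not part of PaddleX: it only builds the argument list shown above, and it assumes the command is run from the PaddleX repository root, where `main.py` lives. It uses `sys.executable` instead of a bare `python` so the current interpreter is reused.

```python
import sys


def build_paddlex_command(config, mode, dataset_dir, overrides=None):
    """Return the argv list for `python main.py -c <config> -o ...`.

    overrides: optional mapping such as {"Train.epochs_iters": 10}; each
    item becomes an extra `-o key=value` pair, in insertion order.
    """
    argv = [
        sys.executable, "main.py", "-c", config,
        "-o", f"Global.mode={mode}",
        "-o", f"Global.dataset_dir={dataset_dir}",
    ]
    for key, value in (overrides or {}).items():
        argv += ["-o", f"{key}={value}"]
    return argv


cmd = build_paddlex_command(
    "paddlex/configs/modules/layout_detection/PP-DocLayout-L.yaml",
    mode="train",
    dataset_dir="./dataset/det_layout_examples",
    overrides={"Global.device": "gpu:0,1", "Train.epochs_iters": 10},
)
# Launch from the PaddleX repository root:
# import subprocess
# subprocess.run(cmd, check=True)
```

Passing a list to `subprocess.run` avoids shell quoting issues, so values such as `gpu:0,1` need no escaping. The same helper also covers `mode="evaluate"`, which takes the same `Global.dataset_dir` argument.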


- During model training, PaddleX automatically saves model weight files, by default in `output`. To specify a different save path, use the `-o Global.output` option (or the `Global.output` field in the configuration file).
- PaddleX shields you from the distinction between dynamic graph weights and static graph weights. During model training, both dynamic and static graph weights are produced, and static graph weights are used for model inference by default.
- After model training completes, all outputs are saved in the specified output directory (default `./output/`), typically including:
    - `train_result.json`: Training result record file, recording whether the training task completed normally, as well as the output weight metrics, related file paths, etc.;
    - `train.log`: Training log file, recording changes in model metrics and loss during training;
    - `config.yaml`: Training configuration file, recording the hyperparameter configuration for this training session;
    - `.pdparams`, `.pdema`, `.pdopt.pdstate`, `.pdiparams`, `.json`: Model weight-related files, including network parameters, optimizer, EMA, static graph network parameters, static graph network structure, etc.
- Notice: Since Paddle 3.0.0, the static graph network structure is stored as JSON (the current `.json` file) instead of protobuf (the former `.pdmodel` file), for compatibility with PIR and greater flexibility and scalability.

4.3 Model Evaluation

After completing model training, you can evaluate the specified model weight file on the validation set to verify the model's accuracy. Using PaddleX for model evaluation, you can complete the evaluation with a single command:

```bash
python main.py -c paddlex/configs/modules/layout_detection/PP-DocLayout-L.yaml \
    -o Global.mode=evaluate \
    -o Global.dataset_dir=./dataset/det_layout_examples
```

Similar to model training, the process involves the following steps:

- Specify the path to the model's `.yaml` configuration file (here it is `PP-DocLayout-L.yaml`).
- Set the mode to model evaluation: `-o Global.mode=evaluate`.
- Specify the path to the validation dataset: `-o Global.dataset_dir`.

Other related parameters can be configured by modifying the fields under `Global` and `Evaluate` in the `.yaml` configuration file. For detailed information, please refer to PaddleX Common Configuration Parameters for Models.


When evaluating the model, you need to specify the path to the model weights file. Each configuration file has a built-in default weight save path. To change it, append a command-line parameter, such as `-o Evaluate.weight_path=./output/best_model/best_model/model.pdparams`.

After model evaluation completes, an `evaluate_result.json` file is generated. It records the evaluation results, specifically whether the evaluation task completed successfully and the model's evaluation metrics, including AP.

4.4 Model Inference

After completing model training and evaluation, you can use the trained model weights for inference predictions. In PaddleX, model inference predictions can be achieved through two methods: command line and wheel package.

4.4.1 Model Inference

To perform inference predictions through the command line, simply use the following command. Before running the following code, please download the demo image to your local machine.

```bash
python main.py -c paddlex/configs/modules/layout_detection/PP-DocLayout-L.yaml \
    -o Global.mode=predict \
    -o Predict.model_dir="./output/best_model/inference" \
    -o Predict.input="layout.jpg"
```

Similar to model training and evaluation, the following steps are required:

- Specify the path to the model's `.yaml` configuration file (here it is `PP-DocLayout-L.yaml`).
- Set the mode to model inference prediction: `-o Global.mode=predict`.
- Specify the model weights path: `-o Predict.model_dir="./output/best_model/inference"`.
- Specify the input data path: `-o Predict.input="..."`.

Other related parameters can be set by modifying the fields under `Global` and `Predict` in the `.yaml` configuration file. For details, please refer to PaddleX Common Model Configuration File Parameter Description.

Alternatively, you can use the PaddleX wheel package for inference, easily integrating the model into your own project. To do so, add the `model_dir="./output/best_model/inference"` parameter to the `create_model(model_name=model_name)` call in the Quick Integration example in Section III.

4.4.2 Model Integration

The model can be directly integrated into PaddleX pipelines or into your own projects.

- Pipeline Integration: The structure analysis module can be integrated into PaddleX pipelines such as the General Table Recognition Pipeline and the Document Scene Information Extraction Pipeline v3 (PP-ChatOCRv3-doc). Simply replace the model path to update the layout area localization module. In pipeline integration, you can use high-performance inference and serving deployment to deploy your model.
- Module Integration: The weights you produce can be directly integrated into the layout area localization module. Refer to the Python example code in the Quick Integration section and simply replace the model with the path to your trained model.

You can also use the PaddleX high-performance inference plugin to optimize the inference process of your model and further improve efficiency. For detailed procedures, please refer to the PaddleX High-Performance Inference Guide.